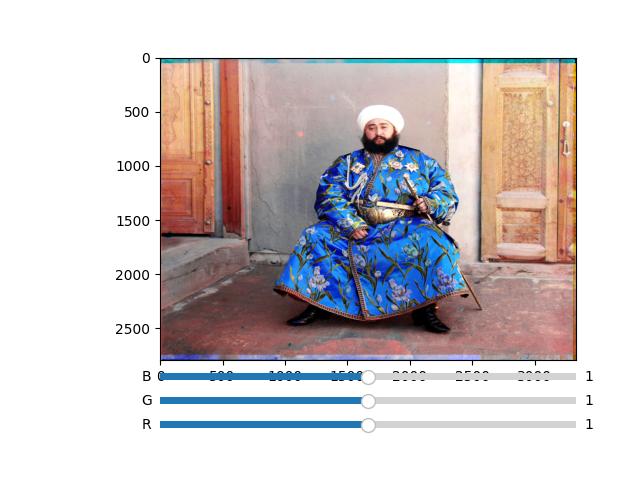

Phase cross-correlation shifts the focus from searching for RGB similarities in the pixel domain to
analyzing the frequency domain: we compare the frequency content of the images to detect similarities.
Initially, I used a standard version of the function for testing. Satisfied with the results, I
implemented an alignment algorithm that first aligns the images using phase cross-correlation and then
fine-tunes the result by applying image sliding to the initially aligned images. This way, I achieved
satisfactory alignment results very efficiently. Encouraged by this improvement, and having learned that
it would be worth extra credit (it was mentioned as part of the B&W on Ed), I delved into the
algorithm's principles to understand and implement it myself. Below, I share the custom function I
coded and explain the underlying logic.

FB = fft2(base_image)

F1 = fft2(image_1)

F2 = fft2(image_2)

Taking the 2D FFT of each image carries it from the pixel domain to the frequency domain, where a
translation of the image shows up as a change in phase rather than in magnitude.
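
To illustrate why the frequency domain is useful here, the following is a minimal sketch, assuming NumPy's `numpy.fft` module and a random array as a stand-in for an image channel (the names `base` and `shifted` are hypothetical and not part of the function above). It checks that a circular shift leaves the FFT magnitudes unchanged and only alters the phases.

```python
import numpy as np

rng = np.random.default_rng(0)
base = rng.random((64, 64))  # synthetic stand-in for one image channel

# Circularly shift the image: 5 rows down and 3 columns to the left.
shifted = np.roll(base, shift=(5, -3), axis=(0, 1))

F_base = np.fft.fft2(base)
F_shifted = np.fft.fft2(shifted)

# The magnitudes agree, so the shift must be encoded in the phases.
same_magnitude = np.allclose(np.abs(F_base), np.abs(F_shifted))
same_spectrum = np.allclose(F_base, F_shifted)

print("same magnitude:", same_magnitude)
print("same complex spectrum:", same_spectrum)
```

Real photographs are not circularly shifted copies of each other, so this property only holds approximately in practice.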

cross_power_spectrum_1 = (FB * np.conj(F1)) / np.abs(FB * np.conj(F1))

cross_power_spectrum_2 = (FB * np.conj(F2)) / np.abs(FB * np.conj(F2))

Calculating the cross power spectrum and normalizing it is important because the normalization keeps
only the phase differences between the images. This removes the influence of pixel intensities, so the
search for shifts focuses on the geometrical composition of the images.
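
The effect of the normalization can be sketched as follows, assuming NumPy and synthetic data. The helper `normalized_cross_power` and the small `eps` term that guards against division by zero are assumptions added for illustration and do not appear in the function above. The sketch checks that making one image three times brighter leaves the normalized spectrum unchanged, and that every entry has unit magnitude.

```python
import numpy as np

def normalized_cross_power(a, b, eps=1e-12):
    """Cross power spectrum of a and b, scaled to unit magnitude (hypothetical helper)."""
    product = np.fft.fft2(a) * np.conj(np.fft.fft2(b))
    # eps is an added safeguard against dividing by zero at empty frequency bins.
    return product / (np.abs(product) + eps)

rng = np.random.default_rng(1)
base = rng.random((32, 32))
moved = np.roll(base, shift=(4, 7), axis=(0, 1))

r_plain = normalized_cross_power(base, moved)
r_bright = normalized_cross_power(base, 3.0 * moved)  # same content, three times brighter

print("unchanged by brightness:", np.allclose(r_plain, r_bright))
print("unit magnitude:", np.allclose(np.abs(r_plain), 1.0))
```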

correlation_1 = fftshift(ifft2(cross_power_spectrum_1))

correlation_2 = fftshift(ifft2(cross_power_spectrum_2))

When we bring the cross power spectra back to the pixel domain with the inverse FFT, the zero-shift
entry of the resulting correlation matrix sits at the corner, and negative shifts wrap around to the
opposite edges. Applying fftshift moves the zero-shift entry to the center of the matrix, which makes
it intuitively easier to locate and interpret the peak.
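
The re-centering can be seen on a toy matrix. This is a small sketch assuming NumPy's `np.fft.fftshift`; the helper `peak_after_fftshift` and the 6x8 shape are made up for illustration.

```python
import numpy as np

shape = (6, 8)
center = (shape[0] // 2, shape[1] // 2)  # (3, 4)

def peak_after_fftshift(row, col):
    """Place a single peak at (row, col) and return its position after fftshift."""
    corr = np.zeros(shape)
    corr[row, col] = 1.0
    shifted = np.fft.fftshift(corr)
    return tuple(int(i) for i in np.unravel_index(np.argmax(shifted), shape))

# A zero-shift peak starts in the top-left corner and lands on the center.
zero_peak = peak_after_fftshift(0, 0)        # (3, 4)

# A peak at row -1 (wrapped to the last row) and column 2 lands one row above
# and two columns to the right of the center.
wrapped_peak = peak_after_fftshift(-1, 2)    # (2, 6)
offset = (wrapped_peak[0] - center[0], wrapped_peak[1] - center[1])  # (-1, 2)

print(zero_peak, wrapped_peak, offset)
```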

maxloc_1 = np.unravel_index(np.argmax(np.abs(correlation_1)), correlation_1.shape)

maxloc_2 = np.unravel_index(np.argmax(np.abs(correlation_2)), correlation_2.shape)

Then, we search each correlation matrix for its peak, whose location encodes the shift needed to align
the images.

shift_x1 = maxloc_1[1] - base_image.shape[1] // 2

shift_y1 = maxloc_1[0] - base_image.shape[0] // 2

shift_x2 = maxloc_2[1] - base_image.shape[1] // 2

shift_y2 = maxloc_2[0] - base_image.shape[0] // 2

The purpose of fftshift becomes clearer here: subtracting the center of the image from the peak
coordinates gives the signed pixel shifts for aligning the images.
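
Putting the steps together, the following is a self-contained sketch for a single image pair. It is assumption-laden: the function name `estimate_shift`, the `eps` safeguard, the use of `numpy.fft`, and the synthetic test image are not from the original function. It follows the same sequence (FFT, normalized cross power spectrum, inverse FFT with fftshift, peak search, center subtraction) and checks the result on an image that was circularly shifted by a known amount.

```python
import numpy as np
from numpy.fft import fft2, ifft2, fftshift

def estimate_shift(base_image, image, eps=1e-12):
    """Return (shift_y, shift_x) that aligns `image` to `base_image` when applied with np.roll."""
    FB = fft2(base_image)
    F = fft2(image)
    product = FB * np.conj(F)
    # eps is an added safeguard against division by zero.
    cross_power_spectrum = product / (np.abs(product) + eps)
    correlation = fftshift(ifft2(cross_power_spectrum))
    maxloc = np.unravel_index(np.argmax(np.abs(correlation)), correlation.shape)
    shift_y = int(maxloc[0]) - base_image.shape[0] // 2
    shift_x = int(maxloc[1]) - base_image.shape[1] // 2
    return shift_y, shift_x

rng = np.random.default_rng(42)
base = rng.random((64, 48))                              # synthetic stand-in for a channel
misaligned = np.roll(base, shift=(7, -5), axis=(0, 1))   # known misalignment

shift_y, shift_x = estimate_shift(base, misaligned)
aligned = np.roll(misaligned, shift=(shift_y, shift_x), axis=(0, 1))

print(shift_y, shift_x)             # -7 5, the shift that undoes the misalignment
print(np.allclose(aligned, base))   # True
```

With this sign convention the returned values are the shifts to apply to the misaligned image, not the displacement that was originally introduced. On real photographs the peak is less sharp than in this synthetic case, which is where a fine-tuning pass such as image sliding can help.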